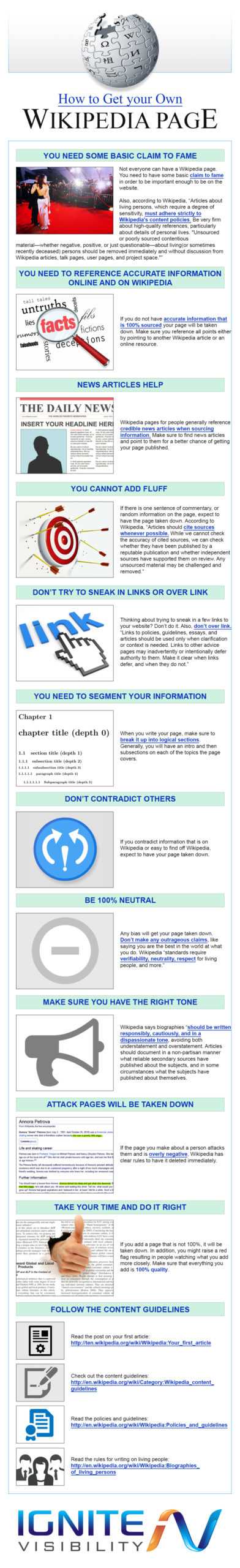

Wikipedia is a valuable source of information, with millions of articles on a wide range of topics. But have you ever wondered how all that information gets on the site in the first place? In this article, we’ll explore the various stages involved in creating a Wikipedia page and how you can potentially contribute to this vast collection of knowledge.

One important thing to note is that Wikipedia articles are not assembled by scraping text and pasting it in; copied material conflicts with the site’s copyright policy. So, instead of using a scraper to pull in existing text, you will have to write a new article in your own words. This helps keep the information on Wikipedia accurate, up-to-date, and reliable.

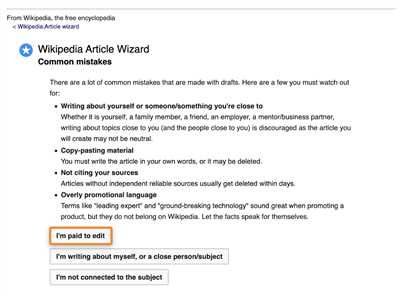

To create a Wikipedia page, you will need to have a registered account on the site. If you don’t have one, you can easily create a new account by following the instructions on the Wikipedia homepage.

Once you have an account, the first step is to familiarize yourself with Wikipedia’s policies and guidelines. Reading the FAQs and other helpful resources provided by Wikipedia will give you a better understanding of what is expected of you as a contributor.

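Now that you have the necessary information, it’s time to start writing your article. You can create a new page by typing the desired title in the search bar on Wikipedia’s main page and running the search. If the page doesn’t exist, the results offer a link to create it, which opens a blank editing page where you can start writing. On the English Wikipedia, very new accounts are typically steered to the draft and review process instead of creating the article directly.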

When writing your article, make sure to include relevant and reliable sources to support the information you provide. Citing your sources is an important part of maintaining the credibility of your article and avoiding potential issues with plagiarism.

Once you have finished writing your article, you can click on the “Show preview” button to see how your page will look on Wikipedia. This gives you an opportunity to make any necessary edits or improvements before publishing your article.

When you are satisfied with your article, click on the “Publish Changes” button to submit your article for review. The Wikipedia community will then review your article and make any necessary adjustments or corrections to ensure that it meets the site’s standards.

If your article is accepted, it will be published on Wikipedia for others to read and learn from. You will also have the opportunity to further contribute to Wikipedia by editing and improving existing articles or creating new ones.

So, if you have a story to share, information to provide, or tips to give, don’t hesitate to contribute to Wikipedia. Your knowledge and expertise can help make Wikipedia an even more valuable resource for people all over the world.

- How to Scrape Wikipedia Articles with Python

- Understanding the Guidelines

- Using a Python Scraper

- Important Considerations

- These updated features on Wikipedia will help you…

- Story by Paul Sawers

- The Importance of Scraping Wikipedia

- Getting Started with Scraping

- Helpful Tips and Features

- Why Scraping Wikipedia Can Be Useful

- One more thing

- FAQs


How to Scrape Wikipedia Articles with Python

Scraping Wikipedia articles can be a valuable skill for anyone looking to gather information from this rich source of knowledge. By creating a web scraper using Python, you can automate the process of extracting data from Wikipedia pages and collect the information you need.

Understanding the Guidelines

Before you start scraping Wikipedia, it’s important to familiarize yourself with its guidelines. Wikipedia has a set of rules and policies on what can and cannot be done on the site, including scraping data. Make sure you read and understand these guidelines to ensure you’re respecting the site’s terms of use.
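One mechanical check that can complement reading the policies is a site’s robots.txt file. The sketch below uses Python’s standard `urllib.robotparser` on a made-up rule set; the rules, the `example.org` URLs, and the `ExampleScraper` agent name are illustrative assumptions, not Wikipedia’s actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
SAMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""


def build_parser(rules_text):
    """Return a parser loaded with the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules_text.splitlines())
    return parser


parser = build_parser(SAMPLE_RULES)

# Ask whether a given client may fetch a given URL under these rules.
allowed = parser.can_fetch("ExampleScraper", "https://example.org/wiki/Page")
blocked = parser.can_fetch("ExampleScraper", "https://example.org/private/data")
print(allowed, blocked)
```

For a live site, `RobotFileParser` can load the real file with `set_url()` followed by `read()` instead of parsing a local string. A robots.txt check does not replace reading the terms of use; it only covers what the file itself declares.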

Using a Python Scraper

Python is a popular programming language for web scraping, thanks to its extensive libraries and tools. One such library is BeautifulSoup, which allows you to parse HTML and XML documents. By using BeautifulSoup in conjunction with Python’s requests module, you can effectively scrape Wikipedia articles.

To begin, install BeautifulSoup and requests using pip: `pip install beautifulsoup4 requests`

Once installed, you can start creating your Wikipedia scraper with Python. The basic steps are:

- Import the necessary modules:

- Specify the URL of the Wikipedia page you want to scrape:

- Send a GET request to retrieve the HTML content of the page:

- Parse the HTML content using BeautifulSoup:

- Extract the desired information from the parsed HTML:

- Print or store the extracted information:

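```python
import requests
from bs4 import BeautifulSoup

url = 'https://en.wikipedia.org/wiki/Python_(programming_language)'

# Identify the client; the name and contact address here are placeholders.
headers = {'User-Agent': 'ExampleScraper/0.1 (contact@example.com)'}

# Fetch the page and stop early on an HTTP error.
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()

# Parse the HTML, then pull out the page title and the main content.
soup = BeautifulSoup(response.text, 'html.parser')
title = soup.find('h1').get_text()
content = soup.find('div', {'id': 'mw-content-text'}).get_text()

print('Title:', title)
print('Content:', content)
```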

Important Considerations

When scraping Wikipedia articles or any other website, it’s crucial to be mindful of certain considerations:

- Respectful scraping: Make sure your scraper is not causing any harm to the website or violating any terms of use. Use appropriate delays between requests and avoid aggressive scraping techniques.

- Updated information: Keep in mind that Wikipedia articles can be updated regularly. If you need the most current information, consider implementing a mechanism to periodically scrape the page and retrieve the updated content.

- Third-party libraries: While BeautifulSoup is a popular choice for web scraping, there are other libraries and frameworks available in Python that can help you with scraping tasks. Explore different options and choose the one that best fits your needs.
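To make the point about delays concrete, here is a minimal sketch of a loop that pauses between requests. The function name `fetch_politely`, its parameters, and the one-second default are assumptions chosen for illustration; `fetch` stands for whatever download function you already use.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing between consecutive requests.

    `fetch` is any callable that takes a URL and returns its content.
    `sleep` is injectable so the pause can be replaced in tests.
    """
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # pause before every request after the first
        results[url] = fetch(url)
    return results
```

With the requests library, `fetch` could be something like `lambda u: requests.get(u, headers=headers, timeout=10).text`, where `headers` carries a descriptive User-Agent. A suitable delay depends on the site and on how many pages you need.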

By following these steps and considering the important factors, you can scrape Wikipedia articles effectively and gather the information you require for your project or research.
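For the “updated information” point, one possible approach is to compare a page’s latest revision ID with the one you stored earlier, and re-download only when it differs. The sketch below builds a MediaWiki API query and reads a `formatversion=2` response; the helper names are hypothetical, and the network call itself is left out so the logic can be followed on its own.

```python
API_URL = "https://en.wikipedia.org/w/api.php"


def revision_query_params(title):
    """Build MediaWiki API parameters asking for a page's latest revision."""
    return {
        "action": "query",
        "prop": "revisions",
        "rvprop": "ids|timestamp",
        "titles": title,
        "format": "json",
        "formatversion": "2",
    }


def latest_revision_id(api_response):
    """Extract the newest revision ID from a formatversion=2 response."""
    page = api_response["query"]["pages"][0]
    revisions = page.get("revisions")
    if not revisions:
        return None  # page is missing or has no revisions listed
    return revisions[0]["revid"]


def needs_refresh(stored_revision_id, api_response):
    """Return True when the latest revision differs from the stored one."""
    current = latest_revision_id(api_response)
    return current is not None and current != stored_revision_id
```

In practice the response would come from something like `requests.get(API_URL, params=revision_query_params(title), headers=headers).json()`, run on whatever schedule suits your project.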

These updated features on Wikipedia will help you…

Wikipedia is a valuable resource for anyone seeking information on a wide range of topics. Whether you’re a student doing research for a paper or just curious about a particular subject, Wikipedia has you covered with its comprehensive collection of articles.

However, finding the information you need can sometimes be a challenge, especially when there are potentially thousands of pages on the same topic. Thankfully, Wikipedia has implemented some updated features to make your search easier and more efficient.

One of the most important is Wikipedia’s set of content guidelines. These guidelines help keep the information on Wikipedia accurate and reliable. They provide rules and standards that contributors must follow when creating or updating articles, such as supporting claims with reliable sources. Because any article may fall short of them at a given moment, treat the guidelines as a reason for reasonable confidence, not as a guarantee.

In addition to the guidelines, Wikipedia’s editor includes a citation helper. Given the URL of a source, it can generate a formatted reference automatically, so you don’t have to type every field by hand. This saves time and keeps citations consistent across articles. Note that it covers citations only: Wikipedia does not provide a tool for scraping text from other websites into an article, and importing text wholesale would conflict with its copyright policy.

Another useful feature is each article’s revision history, reached through the “View history” tab. The history lets you see the development and progress of an article over time. You can view the different versions of an article and see how it has evolved. This is particularly useful when researching a topic that is constantly changing or when you’re interested in the history and development of an article.

Furthermore, Wikipedia now has an updated FAQ section. This section provides answers to commonly asked questions about using Wikipedia. If you’re unsure about how to navigate the site or need clarification on specific rules, the FAQ section is a great resource to consult.

Finally, Wikipedia has improved its search functionality. Now, when you search for a particular topic, you will see more relevant results. The search algorithm has been refined to better match your query with the most appropriate articles. This means that you can quickly find the information you’re looking for without wasting time sifting through irrelevant pages.

In conclusion, these updated features on Wikipedia will help you get the most out of the site. Whether you’re a casual user or a dedicated researcher, these features will make finding and accessing information easier and more efficient. So next time you’re on Wikipedia, take advantage of these features and enhance your knowledge journey.

Story by Paul Sawers

Paul Sawers is a writer and journalist who has written extensively on technology and internet-related topics. In November 2019, he wrote an article on The Next Web titled “How to Scrape Wikipedia with Python” that provided tips and guidelines for creating a scraper to extract information from Wikipedia pages.

The Importance of Scraping Wikipedia

Wikipedia is one of the most popular and widely used websites for information. It provides a wealth of knowledge on a wide range of topics, and its articles are constantly being updated and improved by a community of dedicated contributors. However, there may be situations where it is important to extract and analyze the content of Wikipedia articles for research, data analysis, or other purposes. This is where scraping Wikipedia can be a valuable tool.

Getting Started with Scraping

To scrape information from Wikipedia, Paul Sawers suggests using Python, a popular programming language that has powerful tools for web scraping. He provides a step-by-step guide on how to set up a scraper using Python libraries such as BeautifulSoup and requests.

One important thing to note is that scraping Wikipedia’s HTML directly can conflict with its terms of use, particularly at high request volumes. Instead, Sawers recommends using a third-party Python library called “Wikipedia-API” that provides a convenient way to access Wikipedia’s content within the limits of their guidelines.

In his article, Sawers guides readers through the stages of creating a scraper using the Wikipedia-API library. He explains how to retrieve information from specific pages, search for articles, extract sections of text, and more.
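As a rough illustration of what working with the Wikipedia-API library can look like (this is not code from Sawers’ article), the sketch below fetches a page and lists its section titles. It assumes a recent release of the library, where the `Wikipedia` constructor takes a `user_agent` argument; the helper names `section_outline` and `fetch_outline` are hypothetical.

```python
def section_outline(sections, depth=0):
    """Return indented section titles, walking nested subsections."""
    lines = []
    for section in sections:
        lines.append("  " * depth + section.title)
        lines.extend(section_outline(section.sections, depth + 1))
    return lines


def fetch_outline(title, user_agent):
    """Fetch a page with Wikipedia-API and return its section outline."""
    import wikipediaapi  # third-party: pip install Wikipedia-API

    wiki = wikipediaapi.Wikipedia(user_agent=user_agent, language="en")
    page = wiki.page(title)
    if not page.exists():
        return []  # no such page
    return section_outline(page.sections)
```

A call such as `fetch_outline("Python_(programming_language)", "ExampleProject/0.1 (contact@example.com)")` would return the outline as a list of strings; the user agent shown is a placeholder.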

Helpful Tips and Features

Sawers also provides helpful tips and features that can enhance the scraping process. For example, he explains how to handle disambiguation pages, how to extract images and their metadata, and how to retrieve FAQs from Wikipedia articles.

He also addresses potential challenges and limitations of scraping Wikipedia, such as dealing with dynamic content, handling large volumes of data, and ensuring the reliability and accuracy of the extracted information.

Why Scraping Wikipedia Can Be Useful

Sawers highlights several reasons why scraping Wikipedia can be a useful technique. For researchers and data analysts, extracting data from Wikipedia can provide valuable insights and help in conducting studies and analyses.

Scraping Wikipedia can also be beneficial for content creators who rely on information from the site to create their own articles or supplement their existing content. By using a scraper, they can gather the information they need efficiently.

Furthermore, scraping Wikipedia can be a valuable resource for educational purposes. Teachers and students can use scraped data for research, projects, and presentations.

Overall, Paul Sawers’ article provides a comprehensive and informative guide on how to scrape Wikipedia using Python, highlighting the importance and benefits of this technique. Whether you are a researcher, content creator, or a student, this article can help you harness the wealth of knowledge that Wikipedia offers and leverage it for your own needs.

One more thing

When it comes to getting information from Wikipedia, there is one more option to keep in mind. Instead of writing your own scraper for Wikipedia pages, you can use the Wikipedia Python package (published on PyPI as `wikipedia`). It wraps the site’s API and covers the most common lookups.

With the Wikipedia Python package, you can search for articles and retrieve a page’s title, URL, summary, full content, and revision ID. You can also list the pages an article links to and access its categories, sections, and more. This can save you a lot of time and effort.

One of the useful aspects of this package is that it goes through Wikipedia’s API instead of directly scraping the HTML of Wikipedia pages. This matters because scraping the HTML of Wikipedia pages can potentially violate their Terms of Service.
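A minimal sketch of a lookup with the `wikipedia` package follows, including the case where a title is ambiguous. The `summarize` helper and its `wiki` parameter are assumptions made for illustration; passing the module in explicitly just makes the function easy to exercise without network access.

```python
def summarize(title, wiki=None, sentences=2):
    """Return a short summary, or a list of candidate titles when ambiguous."""
    if wiki is None:
        import wikipedia as wiki  # third-party: pip install wikipedia

    try:
        return wiki.summary(title, sentences=sentences)
    except wiki.exceptions.DisambiguationError as error:
        # The title matches several pages; hand the options back to the caller.
        return list(error.options)
    except wiki.exceptions.PageError:
        return None  # no page with that title
```

Calling `summarize("Python (programming language)")` would return a short string, while an ambiguous title would return a list of more specific titles to choose from.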

So, the next time you need information from Wikipedia in Python, consider the Wikipedia Python package before building a scraper. It spares you the work of writing and maintaining one, and it avoids potential conflicts with Wikipedia’s Terms of Service.

FAQs

Here are some frequently asked questions about getting information from Wikipedia:

Q: Where can I find information about getting data from Wikipedia?

A: Start with Wikipedia’s official website. Its help pages and API documentation describe the supported ways to access content, along with tips and guidelines to follow if you decide to create your own scraper.

Q: How can I be sure that the information on Wikipedia is accurate and up to date?

A: Wikipedia relies on a community of volunteers to update and edit their articles. While they strive for accuracy, it’s always important to remember that anyone can potentially edit a Wikipedia article, so it’s a good idea to cross-reference the information you find on Wikipedia with other reliable sources.

Q: Can I scrape articles from Wikipedia to use on my own site?

A: It is technically possible, and Wikipedia’s text is licensed under a Creative Commons Attribution-ShareAlike license, which permits reuse. The license comes with conditions: you must attribute the content to its original authors and release any adaptation under the same license. If you cannot meet those conditions, it’s better to create your own unique content.

Q: What are some important features of Wikipedia?

A: One of the most important features of Wikipedia is its community-driven nature. It allows anyone to contribute to the site and share their knowledge. Another important feature is the ability to create links within articles, which helps users navigate between related topics.

Q: Why did Paul Sawers title his story “Scraping Wikipedia with Python: A moral dilemma”?

A: Paul Sawers wrote a story about scraping Wikipedia with Python and he titled it “Scraping Wikipedia with Python: A moral dilemma” to highlight the potential ethical concerns associated with scraping third-party sites like Wikipedia. While scraping can be a useful tool, it is important to use it responsibly and respect the guidelines and policies of the websites you are scraping.

Q: Are there more FAQs about Wikipedia?

A: Yes, there are more FAQs about Wikipedia. However, these are some of the most common ones that people have when it comes to getting information from the site. If you have any specific questions, it’s always a good idea to visit Wikipedia’s official FAQ page for more help.