If you have ever wondered how people collect data at scale from sites like Amazon or IMDb, you’re in the right place. In this tutorial, we will show you how to build a webscraper in Python using the BeautifulSoup library. Instead of collecting data from each page by hand, a webscraper automates the process by sending HTTP requests and extracting the information you need from the responses.

The first step in building a webscraper is understanding the structure of the website you want to scrape. In this example, we will collect data about movies from a movie website such as IMDb. Not all websites allow scraping, so check the website’s terms of service before proceeding.
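A person has to read the terms of service, but many sites also publish a robots.txt file that states which paths automated clients may fetch. Python’s standard library can check those rules. The sketch below parses a hypothetical robots.txt from a string so that it runs offline. For a real site you would call `set_url()` with the site’s robots.txt address and then `read()` instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs without a network.
SAMPLE_ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# can_fetch(user_agent, url) reports whether the parsed rules allow fetching the URL.
print(parser.can_fetch("my-scraper", "http://www.example.com/movies"))
print(parser.can_fetch("my-scraper", "http://www.example.com/private/data"))

# crawl_delay() returns the requested pause (in seconds) between requests, if any.
print(parser.crawl_delay("my-scraper"))
```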

The BeautifulSoup library makes it easy to extract data from HTML. Its `find()` and `find_all()` methods search the parsed document by tag name and attributes. Its `select()` method accepts CSS selectors, much like jQuery does. Other tools fill related roles. jsoup does similar parsing for Java, and browser developer tools such as Chrome’s Inspect panel help you explore a page’s HTML. For Python users, though, BeautifulSoup is the most familiar choice and makes scraping websites straightforward.
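To show both styles side by side, this sketch runs `find_all()` and `select()` against a small, made-up HTML fragment. The class names `movie` and `movie-title` are illustrative assumptions, not taken from any real site.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for a downloaded page.
html = """
<div class="movie">
  <h2 class="movie-title">Movie A</h2>
  <span class="rating">8.1</span>
</div>
<div class="movie">
  <h2 class="movie-title">Movie B</h2>
  <span class="rating">7.4</span>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Beautiful Soup's own search API: a tag name plus attribute filters.
titles_find = [tag.get_text(strip=True) for tag in soup.find_all("h2", class_="movie-title")]

# CSS selectors through select(), the part that resembles jQuery.
titles_select = [tag.get_text(strip=True) for tag in soup.select("div.movie > h2.movie-title")]

print(titles_find)    # ['Movie A', 'Movie B']
print(titles_select)  # ['Movie A', 'Movie B']
```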

To start, install the BeautifulSoup package, which is published on PyPI as `beautifulsoup4`. Install the `requests` library (for sending HTTP requests) and `pandas` alongside it by running `pip install beautifulsoup4 requests pandas` in your terminal. Once the packages are installed, you can begin writing the scraper code.

In this tutorial, we will use a step-by-step approach to build the webscraper. We will teach you how to make HTTP requests, parse HTML code, loop through pages, and extract data from different elements. By the end of this tutorial, you will have a scraper that collects movie data and stores it in a Pandas DataFrame for further analysis.

Before we dive into the code, let’s go through a glossary of terms that you will encounter in this tutorial:

Webscraper: A software program that automates the process of collecting data from websites.

HTML: Hypertext Markup Language, the standard language for creating web pages.

HTTP: Hypertext Transfer Protocol, the protocol used for transmitting data over the web.

HTTP Request: A request sent by a client to a server to retrieve specific information.

BeautifulSoup: A Python library for parsing HTML and XML code, commonly used for webscraping.

Pandas: A popular Python library for data manipulation and analysis.

In the code examples in this tutorial, the `.text` attribute is used to extract the text content of an element. This is useful when you want the text inside an element, such as a quote or a movie title. To see the HTML of an element you want to scrape, right-click it in Chrome and choose Inspect.
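Real pages often wrap text in whitespace and nested tags. The sketch below, built on hypothetical markup, compares `.text` with `get_text()` using a separator and `strip=True`. The second form usually gives cleaner values.

```python
from bs4 import BeautifulSoup

# Hypothetical markup with surrounding whitespace and a nested tag.
html = """
<h3 class="title">
    The Example Movie <small>(2020)</small>
</h3>
"""

soup = BeautifulSoup(html, "html.parser")
heading = soup.find("h3", class_="title")

# .text joins every nested string and keeps the original whitespace.
print(repr(heading.text))

# get_text(" ", strip=True) strips each piece and joins the pieces with a space.
print(heading.get_text(" ", strip=True))  # The Example Movie (2020)
```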

Python Web Scraping Tutorial – How to Scrape Data From Any Website with Python

In this tutorial, we will teach you how to build a webscraper using Python, specifically the BeautifulSoup library. Web scraping is a useful approach to collect data from various websites, and it allows you to extract specific information from web pages.

If you’re not familiar with web scraping or BeautifulSoup, here’s a quick glossary:

| Term | Definition |
| --- | --- |
| Web scraping | A technique of extracting data from websites using software packages |
| BeautifulSoup | A Python library that makes it easy to scrape information from web pages |

Before we jump into coding, let’s understand the basic steps involved in web scraping:

- Send an HTTP request to the URL of the webpage you want to scrape

- Inspect the HTML of the webpage to identify the specific elements containing the data you need

- Use BeautifulSoup functions to extract the data from the HTML

- Collect the scraped data and store it in a structured format, like a dataframe
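One way to map these steps onto code is to give each its own small function. Fetching then stays separate from parsing, so the parsing can be tried on a saved HTML string. This is a minimal sketch under stated assumptions: the `.movie-title` selector is hypothetical, and the inspection step happens in the browser rather than in code.

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup


def fetch(url: str) -> str:
    """Send an HTTP GET request and return the page's HTML."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def extract(html: str) -> list[dict]:
    """Pull out the fields identified while inspecting the page.

    ".movie-title" is a hypothetical selector; use whatever the target site uses.
    """
    soup = BeautifulSoup(html, "html.parser")
    return [{"title": tag.get_text(strip=True)} for tag in soup.select(".movie-title")]


def store(rows: list[dict]) -> pd.DataFrame:
    """Put the scraped rows into a structured DataFrame."""
    return pd.DataFrame(rows)


# Against a real page this would be: store(extract(fetch(url)))
df = store(extract('<h2 class="movie-title">Movie A</h2><h2 class="movie-title">Movie B</h2>'))
print(df)
```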

To illustrate this process, we will build a webscraper to scrape data from a movie website. Here’s how we can do it:

- First, we need to install BeautifulSoup, plus the requests and pandas libraries that the code below imports. Open your terminal and run the following command:

```shell
pip install beautifulsoup4 requests pandas
```

- Now, let’s import the necessary libraries in our Python code:

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
```

- We need to send an HTTP request to the movie website and get the HTML content. We can use the requests library to do this:

```python
url = "http://www.example.com"
response = requests.get(url)
content = response.text
```
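In practice, two request options are worth adding. A timeout stops a stalled server from hanging the scraper, and `raise_for_status()` stops an error page from being parsed as if it were data. This is a minimal sketch, assuming a placeholder User-Agent string and the tutorial’s example.com URL. The prepared request at the end shows what would be sent without touching the network.

```python
import requests

URL = "http://www.example.com"  # placeholder URL from the tutorial

# Placeholder User-Agent; identify your scraper honestly on real sites.
HEADERS = {"User-Agent": "movie-scraper-tutorial/0.1"}


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page, failing loudly instead of parsing an error page."""
    response = requests.get(url, headers=HEADERS, timeout=timeout)
    # raise_for_status() raises requests.HTTPError for 4xx and 5xx responses.
    response.raise_for_status()
    return response.text


# Preparing (but not sending) the request shows exactly what would go out.
prepared = requests.Request("GET", URL, headers=HEADERS).prepare()
print(prepared.method, prepared.url)
print(prepared.headers["User-Agent"])

# Real usage (makes a network request):
# content = fetch_html(URL)
```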

- Next, we’ll use BeautifulSoup to parse the HTML and print it in an indented, readable form, so we can study the page’s structure:

```python
soup = BeautifulSoup(content, "html.parser")
print(soup.prettify())
```
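On a long page, the prettified output can be hard to scan. One possible shortcut is to count how often each class name appears. Classes that repeat once per item are good candidates for selectors. The sketch below uses a hypothetical fragment. In practice, you would pass the downloaded `content` instead.

```python
from collections import Counter

from bs4 import BeautifulSoup

# Hypothetical page fragment with two repeated movie entries and a footer.
html = """
<ul>
  <li class="movie"><span class="movie-title">Movie A</span><span class="rating">8.1</span></li>
  <li class="movie"><span class="movie-title">Movie B</span><span class="rating">7.4</span></li>
</ul>
<footer class="site-footer">About</footer>
"""

soup = BeautifulSoup(html, "html.parser")

# find_all(class_=True) matches every tag that has a class attribute;
# Beautiful Soup returns "class" as a list because it can hold several names.
class_counts = Counter(
    class_name
    for tag in soup.find_all(class_=True)
    for class_name in tag.get("class", [])
)
print(class_counts.most_common())
```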

- Now that we understand the HTML structure, we can use BeautifulSoup’s `select()` method to extract specific data. It accepts jQuery-style CSS selectors. For example, if the movie titles carry a `movie-title` class, we can extract them with the following code:

```python
title_elements = soup.select(".movie-title")
titles = [element.text for element in title_elements]
print(titles)
```

- We can loop through the extracted elements and collect the data we need. Let’s say we want to scrape the movie titles, ratings, and release dates:

```python
movies = []

for title_element in title_elements:
    title = title_element.text
    # find_next() takes a tag name and attribute filters, not a CSS selector,
    # so the class is matched with class_="rating" rather than ".rating".
    rating_element = title_element.find_next(class_="rating")
    rating = rating_element.text
    release_date_element = rating_element.find_next(class_="release-date")
    release_date = release_date_element.text
    movie = {"title": title, "rating": rating, "release_date": release_date}
    movies.append(movie)

print(movies)
```
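`find_next()` searches forward through the whole document. If one movie is missing its rating, it will pick up the next movie’s rating instead. When each movie sits in its own container element, a more robust alternative is to search only inside that container and then load the rows into pandas. This sketch assumes hypothetical `div.movie` containers.

```python
import pandas as pd
from bs4 import BeautifulSoup

# Hypothetical markup in which each movie lives in its own container.
html = """
<div class="movie">
  <h2 class="movie-title">Movie A</h2>
  <span class="rating">8.1</span>
  <span class="release-date">2020-05-01</span>
</div>
<div class="movie">
  <h2 class="movie-title">Movie B</h2>
  <span class="rating">7.4</span>
  <span class="release-date">2019-11-15</span>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

movies = []
for card in soup.select("div.movie"):
    # select_one() searches only inside this card, so a field cannot
    # drift onto a neighboring movie.
    movies.append({
        "title": card.select_one(".movie-title").get_text(strip=True),
        "rating": card.select_one(".rating").get_text(strip=True),
        "release_date": card.select_one(".release-date").get_text(strip=True),
    })

df = pd.DataFrame(movies)
print(df)
```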

By following these steps, you can quickly scrape data from many static websites. Keep in mind that every website has its own HTML structure. You’ll need to inspect the HTML of each site you want to scrape and adjust the selectors to match.
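One way to cope with differing HTML structures is to keep each site’s selectors in a small configuration map. The extraction code can then stay the same from site to site. This sketch assumes two hypothetical sites and their selectors. Missing fields become `None` instead of raising an error.

```python
from bs4 import BeautifulSoup

# Hypothetical selector maps, one per site, because each site's HTML differs.
SITE_SELECTORS = {
    "site_a": {"item": "div.movie", "title": "h2.movie-title", "rating": "span.rating"},
    "site_b": {"item": "li.film", "title": "a.name", "rating": "em.score"},
}


def extract_items(html: str, selectors: dict[str, str]) -> list[dict]:
    """Extract every field named in `selectors` (except "item") from each item."""
    soup = BeautifulSoup(html, "html.parser")
    fields = [name for name in selectors if name != "item"]
    rows = []
    for item in soup.select(selectors["item"]):
        row = {}
        for field in fields:
            node = item.select_one(selectors[field])
            row[field] = node.get_text(strip=True) if node else None
        rows.append(row)
    return rows


site_b_html = '<ul><li class="film"><a class="name">Movie C</a><em class="score">6.9</em></li></ul>'
print(extract_items(site_b_html, SITE_SELECTORS["site_b"]))
# [{'title': 'Movie C', 'rating': '6.9'}]
```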

This tutorial showed you a basic approach to web scraping using Python and the BeautifulSoup library. With the knowledge you gained here, you can build more specific and complex web scrapers for your needs. Happy scraping!

Requirements

Before you can start building a webscraper, there are a few requirements that you need to fulfill. Here are the essential elements you’ll need:

1. Python: Webscraping is typically done using programming languages like Python. If you are not familiar with Python, there are many online resources available to teach you the basics.

2. Beautiful Soup library: Beautiful Soup is a Python package that makes it easy to scrape information from web pages. It is powerful and flexible, allowing you to extract data from different websites.

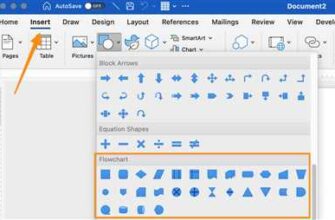

3. Chrome web browser: Beautiful Soup does not need a browser to scrape a page, but Chrome is recommended for planning your scraper. Its built-in developer tools let you inspect the HTML code and find the specific elements you want to scrape.

4. Understanding of HTML and CSS: To build a webscraper, you need to have a basic understanding of HTML and CSS. This will enable you to identify the specific elements that you want to scrape.

5. Familiarity with libraries like pandas: Once you have extracted the data, you may want to store it in a structured format like a dataframe. Libraries like pandas make it easy to work with data in Python.
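As an illustration of the pandas requirement, here is a minimal sketch of the storage step. It assumes the scraper has produced a list of dictionaries, and the movie values are made up. The CSV is written to an in-memory buffer so the example stays self-contained. With a real file you would pass a path such as `"movies.csv"`.

```python
import io

import pandas as pd

# Rows as a scraper typically produces them: one dict per item (made-up values).
movies = [
    {"title": "Movie A", "rating": "8.1", "release_year": "2020"},
    {"title": "Movie B", "rating": "7.4", "release_year": "2019"},
]

# Each dict becomes a row; the dict keys become the column names.
df = pd.DataFrame(movies)
print(df)

# Save the table for later analysis; index=False omits the row numbers.
buffer = io.StringIO()
df.to_csv(buffer, index=False)
print(buffer.getvalue())
```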

With these requirements in place, you’ll be ready to build your webscraper from scratch. In the next section, we will go through a step-by-step tutorial on how to use Beautiful Soup to scrape data from a specific website.

Loop Through Products

Once you’ve successfully extracted the data from a single page using a web scraping library like BeautifulSoup or jsoup, you’ll likely want to extract data from multiple pages.

To do this, you’ll need to loop through the pages and repeat the scraping process. Here’s how:

1. Identify the specific elements on each page that contain the data you want to extract.

2. Use the requests library to send an HTTP request to the page you want to scrape.

3. Inspect the page’s HTML code to find the element or elements that contain the desired data.

4. Use the scraping library’s functions and methods to extract the desired data from the HTML code of each page.

5. Repeat steps 2-4 for each page you want to scrape.

6. Collect the extracted data and store it in a data structure like a pandas DataFrame or any other suitable format.
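Here is a minimal sketch of that page loop. It assumes a hypothetical `?page=N` URL pattern, so check how the target site actually paginates. The fetch function is passed in as a parameter, which lets the example run offline with canned HTML. A short pause between requests keeps the load on the server low.

```python
import time
from typing import Callable

from bs4 import BeautifulSoup


def scrape_pages(
    base_url: str,
    page_count: int,
    fetch: Callable[[str], str],
    delay_seconds: float = 1.0,
) -> list[dict]:
    """Request pages 1..page_count, extract every product, and combine the results.

    The "?page=N" URL pattern is a hypothetical assumption.
    """
    products = []
    for page in range(1, page_count + 1):
        html = fetch(f"{base_url}?page={page}")  # send the HTTP request for this page
        soup = BeautifulSoup(html, "html.parser")
        for node in soup.find_all("div", class_="product"):  # same extraction on every page
            products.append({"name": node.find("h3").get_text(strip=True), "page": page})
        if page < page_count:
            time.sleep(delay_seconds)  # pause so the server is not overloaded
    return products


# Offline usage: a dictionary lookup stands in for a real HTTP fetch.
# Against a live site you might pass: lambda url: requests.get(url, timeout=10).text
fake_pages = {
    "http://www.example.com/movies?page=1": '<div class="product"><h3>Movie A</h3></div>',
    "http://www.example.com/movies?page=2": '<div class="product"><h3>Movie B</h3></div>',
}
rows = scrape_pages("http://www.example.com/movies", 2, fake_pages.__getitem__, delay_seconds=0)
print(rows)  # [{'name': 'Movie A', 'page': 1}, {'name': 'Movie B', 'page': 2}]
```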

Looping through products is a common task in web scraping. Websites often have a list or gallery of products, and you may want to collect specific information about each product, such as its name, price, and description.

For example, if you were building a movie scraper, you could loop through the movie listings on a movie website and extract information such as the movie title, release date, and actors.

Here’s an example code snippet in Python using the Beautiful Soup library:

```python
import requests
from bs4 import BeautifulSoup

url = "http://www.example.com/movies"
response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")

products = []

for product_node in soup.find_all("div", class_="product"):
    name = product_node.find("h3").text
    price = product_node.find("span", class_="price").text
    description = product_node.find("p", class_="description").text
    product = {
        "name": name,
        "price": price,
        "description": description,
    }
    products.append(product)

print(products)
```

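This code requests the listings page and finds every `<div>` with the class `product`. It extracts the name, price, and description from each one and stores the results as a list of dictionaries. The URL, tag names, and class names are placeholders, so on a real movie site you would replace them with whatever that site’s listing markup uses. You can then use this data in various ways, such as displaying it on a web page or analyzing it further.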
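On real listings some items lack a field. When that happens, `find()` returns `None` and calling `.text` on it raises an `AttributeError`. A small helper can return `None` for missing fields instead. In this sketch the helper `text_or_none` is hypothetical and the markup is made up, with the second product deliberately missing its description.

```python
from bs4 import BeautifulSoup

# Hypothetical listing in which the second product has no description.
html = """
<div class="product"><h3>Movie A</h3><span class="price">$9.99</span>
  <p class="description">A sample description.</p></div>
<div class="product"><h3>Movie B</h3><span class="price">$4.99</span></div>
"""


def text_or_none(parent, selector):
    """Return the stripped text of the first match for `selector`, or None if absent."""
    node = parent.select_one(selector)
    return node.get_text(strip=True) if node is not None else None


soup = BeautifulSoup(html, "html.parser")
products = []
for product_node in soup.find_all("div", class_="product"):
    products.append({
        "name": text_or_none(product_node, "h3"),
        "price": text_or_none(product_node, "span.price"),
        "description": text_or_none(product_node, "p.description"),
    })

print(products)
```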

In conclusion, looping through products is a fundamental aspect of web scraping. It allows you to collect data from multiple pages and automate the process of extracting information from websites. By following the above approach and using the right libraries and tools, you can build a web scraper that meets your specific requirements in just a few lines of code.

Build a Web Scraper with Python in 5 Minutes

If you’re familiar with Python and want to build a web scraper, there are several libraries and tools available that can help you accomplish this task. In this tutorial, we will specifically use the BeautifulSoup library, which is a Python package for parsing HTML and XML documents.

Web scraping is the process of extracting data from websites automatically and in a structured form that meets your requirements. It is much more efficient than copying data from websites by hand, especially for large amounts of data.

Before we dive into the code, let’s understand what a web scraper does. A web scraper is a program or script that navigates through web pages, extracts data from them, and collects it for further use. In our case, we will be extracting movie data from a website.

The first step is to inspect the website you want to scrape using your favorite browser, such as Chrome. This will give you an understanding of the structure of the website and the elements you need to extract data from.

Next, we will write the Python code to scrape the website using BeautifulSoup. Here’s a code snippet that shows how to build a web scraper in Python:

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

# Specify the URL of the website you want to scrape
url = "http://www.example.com"

# Send a GET request to the URL
response = requests.get(url)

# Parse the HTML content of the website
soup = BeautifulSoup(response.content, "html.parser")

# Find the specific element(s) you want to scrape
# Here, we are scraping a movie title and its release year
movie_title = soup.find("h1", attrs={"class": "movie-title"}).text
release_year = soup.find("span", attrs={"class": "release-year"}).text

# Create a pandas DataFrame to store the scraped data
data = pd.DataFrame({"Title": [movie_title], "Release Year": [release_year]})

# Print the scraped data
print(data)
```

As you can see, with just a few lines of code we can scrape data from a specific website and store it in a pandas DataFrame. BeautifulSoup’s `find()` and `find_all()` methods locate elements by tag name and attributes. Its `select()` method accepts CSS selectors, similar to jQuery.
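Scraped values always arrive as strings, often with extra characters around them. Before analysis it usually pays to convert them to numbers. This sketch assumes hypothetical raw formats such as `"(2020)"` and `"8.1/10"`. Values that cannot be parsed become missing values instead of raising errors.

```python
import pandas as pd

# Hypothetical raw values as they might appear in page text.
data = pd.DataFrame({
    "Title": ["Movie A", "Movie B", "Movie C"],
    "Release Year": ["(2020)", "2019", "TBA"],
    "Rating": ["8.1/10", "7.4/10", ""],
})

# Take the first four-digit run from the year text; rows without one become <NA>.
data["Release Year"] = pd.to_numeric(
    data["Release Year"].str.extract(r"(\d{4})", expand=False), errors="coerce"
).astype("Int64")

# Keep the part before "/" and convert it; unparseable values become NaN.
data["Rating"] = pd.to_numeric(data["Rating"].str.split("/").str[0], errors="coerce")

print(data.dtypes)
print(data)
```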

Instead of manually collecting data from multiple pages, we can use a loop to scrape data from multiple pages within a website. This makes the web scraper more efficient and automated.
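Many sites paginate with a "next page" link instead of a predictable URL pattern. In that case the loop can follow the link until none remains. In this sketch, the selectors `.movie-title` and `a.next-page` are hypothetical. The `fetch` function is passed in so the example can run on canned HTML. A visited set and a page cap keep a site with circular links from trapping the scraper.

```python
import time
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def crawl_listing(start_url, fetch, max_pages=50, delay_seconds=1.0):
    """Collect movie titles, following "next page" links until none remain.

    `fetch` takes a URL and returns HTML; the selectors are hypothetical.
    """
    titles = []
    visited = set()
    url = start_url
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        soup = BeautifulSoup(fetch(url), "html.parser")
        titles.extend(tag.get_text(strip=True) for tag in soup.select(".movie-title"))
        next_link = soup.select_one("a.next-page[href]")
        # urljoin resolves relative links such as "/movies?page=2" against the current URL.
        url = urljoin(url, next_link["href"]) if next_link else None
        if url:
            time.sleep(delay_seconds)  # be polite between requests
    return titles


pages = {
    "http://www.example.com/movies": (
        '<h2 class="movie-title">Movie A</h2>'
        '<a class="next-page" href="/movies?page=2">Next</a>'
    ),
    "http://www.example.com/movies?page=2": '<h2 class="movie-title">Movie B</h2>',
}
print(crawl_listing("http://www.example.com/movies", pages.__getitem__, delay_seconds=0))
# ['Movie A', 'Movie B']
```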

In conclusion, building a web scraper with Python is a quick and useful skill to have in your coding arsenal. It allows you to collect data from websites and automate the process of extracting and storing it. With the help of libraries like BeautifulSoup, you can get a basic scraper running in just a few minutes.

Before you start scraping websites, it’s important to understand and respect the website’s terms of use. Make sure you’re not violating any rules or policies while scraping data.

I hope this tutorial was helpful in teaching you how to build a web scraper with Python. Happy scraping!

Glossary

– Web scraping: The process of extracting data from websites

– Beautiful Soup: A Python library for parsing HTML and XML documents

– Pandas: A Python library for data manipulation and analysis

– URL: Uniform Resource Locator, which is the address of a specific website or webpage on the internet

– GET request: An HTTP request method used to retrieve data from a server

– HTML: HyperText Markup Language, which is the standard markup language for creating web pages

– jQuery-like syntax: A syntax that resembles the jQuery library, which is a fast, small, and feature-rich JavaScript library

– Loop: A programming construct that repeats a group of instructions until a certain condition is met

– DataFrame: A 2-dimensional labeled data structure in pandas, similar to a table in a relational database

Quote by Jacob: “Web scraping is a powerful tool for collecting data from websites, and Python libraries like BeautifulSoup make it easy to build a web scraper that meets your specific requirements.”

Conclusion

In this tutorial, we have learned how to build a web scraper to collect data from websites. We started with the requirements and the basic steps of web scraping. We also noted that libraries such as jsoup (for Java) and Beautiful Soup (for Python) are useful for scraping data from HTML pages.

We learned how to inspect web pages with the Chrome browser’s developer tools to find the specific elements we want to scrape. We then located those elements in code with Beautiful Soup’s `find()` and `find_all()` methods and with CSS selectors through `select()`.

jsoup offers a similar workflow for Java developers, with its own functions for making HTTP requests and extracting data from specific elements. The examples in this tutorial, however, use Python throughout.

To scrape data using Python, we introduced the Beautiful Soup library. We saw how to collect data from websites by sending HTTP requests with the requests library, navigating the parsed HTML tree with Beautiful Soup, and storing the results in a pandas DataFrame.

We also learned about the limitations and ethical considerations of web scraping. It’s important to respect website terms of service and not overwhelm servers with too many requests. Pages that build their content with JavaScript require more advanced techniques, such as headless browsers, which are beyond the scope of this tutorial.


In conclusion, web scraping is a powerful tool for data collection and analysis. It allows us to extract valuable information from websites efficiently and automate the process of data collection. With the knowledge shared in this tutorial, you should now be familiar with the basics of web scraping and be able to build your own web scrapers to collect data from many websites.

Remember to always be respectful and responsible when web scraping, and check the terms of service for any website you plan to scrape. Happy scraping!

Glossary

In this glossary, we will define and explain some of the key terms and concepts related to building a webscraper:

- Webscraper: A program or script that is designed to extract data from websites.

- Scraping: The process of extracting data from a website.

- Inspect: Using developer tools or browser extensions to examine the underlying structure of a webpage.

- Loop: Repeating a set of instructions or code multiple times.

- Node: An individual element in the HTML structure of a webpage.

- BeautifulSoup4: The package name under which Beautiful Soup is installed with pip; a Python library for parsing HTML and XML documents.

- Library: A collection of pre-written code and functions that can be used to perform specific tasks.

- Pandas: A powerful data manipulation library for Python.

- Jsoup: A Java library for extracting and manipulating HTML data.

- Attributes: Characteristics or properties of an HTML element, such as its `class` or `href`.

- Functions: Reusable blocks of code that perform specific tasks.

- Python: A popular programming language known for its simplicity and readability.

- Package: A collection of related files and modules that can be easily distributed and installed.

- Element: A part of an HTML document, such as a heading or a link, usually delimited by an opening and a closing tag.

- HTTP: Hypertext Transfer Protocol, a protocol for transferring information on the World Wide Web.

- Request: In HTTP, a message that a client sends to a server to ask for a resource, such as a webpage.

- Dataframe: A two-dimensional data structure in Pandas, similar to a table in a database.

- JQuery-like: Similar to the syntax and functionality of jQuery; in BeautifulSoup this mainly refers to the CSS selectors accepted by `select()`.

- Chrome: A popular web browser developed by Google.

- Web: Short for World Wide Web, the system of interlinked hypertext documents accessed via the internet.